Extra Materials:

In this episode, we talked about some of the theory that underlies our current understanding of the structure of natural languages. When we look at language through the lens of the rules that generate it (e.g., IP → NP I’, I’ → I VP), we’re able to give language a very precise description in the form of a generative grammar. A generative grammar is kind of like a machine that churns out sentences according to the rules that make it up, and it can be used to talk about what we know when we know a language.

When a language is given form as a generative grammar, we can say that language has been formalized, and we can now talk about that language in terms of formal language theory. That means we can we can finally begin to answer questions about how sophisticated a language is, and how it compares to other languages — either real or imagined.

Now, the study of formal languages is something that’s useful not only to linguists, but to researchers in other fields as well. Theoretical computer science, for example, has made significant use of the study of formal languages, as well as the Chomsky hierarchy and its ranking of languages from simple to complex. And this is no coincidence: formal language theory and, in particular, the Chomsky hierarchy, bear a very close relationship to automata theory, which is the study of abstract machines.

Just as linguists use formal grammars to talk about language, computer scientists use abstract models of machines — automata — to talk about real computers. And in the same way that formal languages can be ordered in terms of their complexity, abstract machines can be ordered too. In fact, there’s a direct connection between the different levels of the Chomsky hierarchy, and the different kinds of theoretical machines that computer scientists have used to study computation.

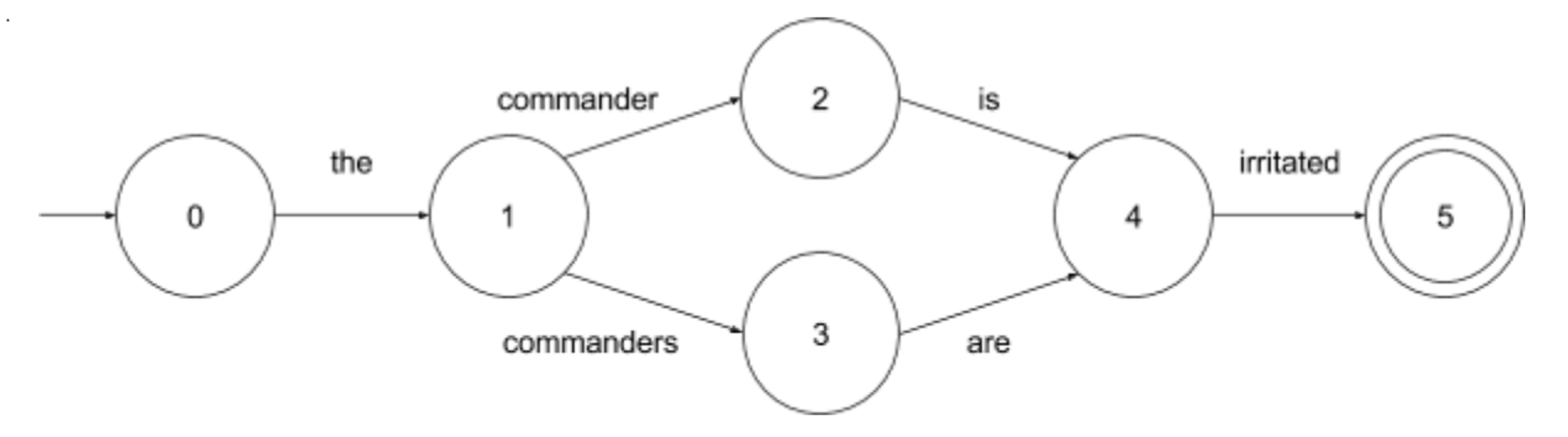

The simplest grammars — finite-state grammars — are so called because they correspond to the simplest automata: finite-state machines. And while a finite-state grammar (FSG) is designed to generate a language, a finite-state machine (FSM) can recognize one. To get a sense of how this works, we can take a look at this schematic for an FSM, which has been built to recognize just two English sentences: “the commander is irritated” and “the commanders are irritated”.

The schematic tells us that this machine can be in one of five possible states — those numbered circles in the diagram. The machine begins in state 0, as indicated by that first arrow, and transitions to the next state when it receives as input the word “the”. After that, it can move either into state 2 or 3, depending on whether it gets “commander” or “commanders”. When the machine’s gotten everything it’s looking for, it transitions into the final, or accepting, state. And, so, this particular FSM will only ever reach its final state if given one of the two sentences mentioned above.

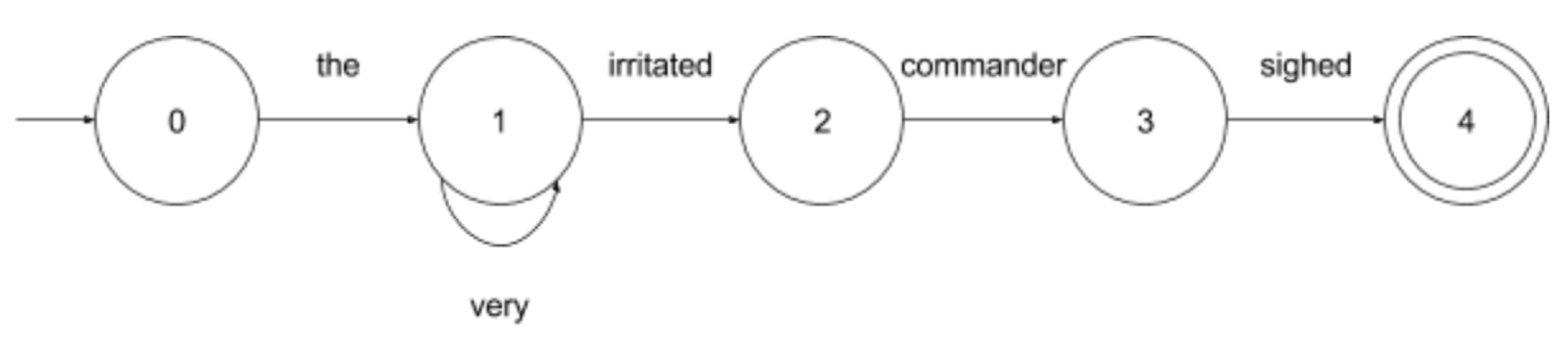

Now, FSMs do have some flexibility. For example, we can build one that has a loop in it, which means it can accept infinitely many sentences, as long as those sentences look something like “the very very . . . very very irritated commander sighed".

But as we saw in the episode, FSGs aren’t enough to capture the complexity of a language like English, and so neither are FSMs. If you gave an FSM a Type-2 language, it wouldn’t know what to do with it, and it would never reach its final, accepting state. Thankfully, though, there are more powerful automata. An abstract machine that can recognize a Type-2 grammar is called a pushdown automaton (PDA).

This name comes from the fact that these machines have memory, which takes the form of a stack onto which new information can be pushed down. And if you’ve ever operated a Pez dispenser, you know how this works! When we want to store something in the machine’s memory, we push a new symbol down onto the top of the stack, so it can be retrieved later on.

To get a sense of how PDAs operate, let’s take a look at a couple of the components of one that’s able to recognize sentences with an arbitrary number of ifs and eithers in them, so long as those sentences also have an equal number of thens and ors.

As you can see, a PDA looks a lot life an FSM, but in order to transition from one state to the next, it isn’t enough to just recognize some word. In the case of “if”, the machine also adds a “0” to its memory; in the case of “either”, it adds a “1”. Depending on how the machine is built, it can cycle through this process a whole bunch of times, and the stack can really fill up! Later on down the line, when it’s ready to start processing thens and ors, it continues to run smoothly, knowing that its memory did the job of keeping track of how many thens and ors it should expect to see. For every “0” in the stack, it should get a “then”, and for every “1”, it should get an “or”. When the stack’s empty, it’s done its job!

Of course, there are Type-1 and Type-0 grammars too, and each of them has their own corresponding automaton. A Linear-bounded Turing machine can recognize a Type-1 language, and a Turing machine can recognize Type-0. Their designs are a little complex, so we won’t go through them here, but it’s worth noting that the most powerful automata — named after pioneering computer scientist Alan Turing — actually form the theoretical basis for how computers work today!

While there’s still debate about where exactly human languages fit on the Chomsky hierarchy, it’s undeniable that it’s played a big role in both linguistics and computer science, and that it’s a trend likely to continue!

Discussion:

So how about it? What do you all think? Let us know below, and we’ll be happy to talk with you about how to capture the complexity of different kinds of languages. There’s a lot of interesting stuff to say, and we want to hear what interests you!

Previous Topic: Next Topic:

Quantifying Sets and Toasters Coming soon!